- Prometheus statsd exporter update

- Prometheus statsd exporter Patch

- Prometheus statsd exporter series
It is not uncommon to separate Kong functionality amongst a cluster of nodes. For example, one or more nodes serve only proxy traffic, while another node is responsible for serving the Kong Admin API and Kong Manager. In this case, the node responsible for proxy traffic writes the data to a StatsD exporter, and the node responsible for the Admin API reads from Prometheus.

Prometheus statsd exporter series

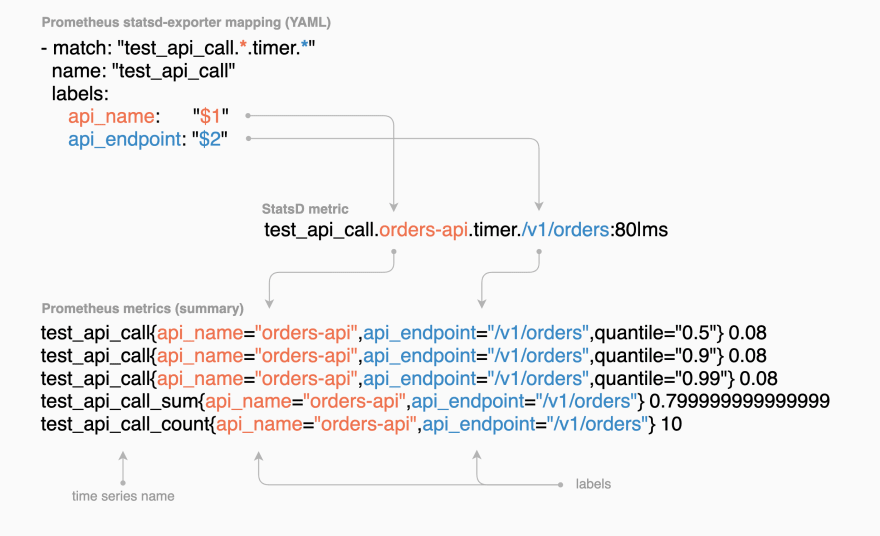

This document covers integrating Kong Vitals with a new or existing Prometheus time-series server or cluster. Leveraging a time-series database for Vitals data can improve request and Vitals performance in very high-traffic Kong Gateway clusters (such as environments handling tens or hundreds of thousands of requests per second), without placing additional write load on the database backing the Kong cluster.

For using Vitals with a database as the backend, refer to Kong Vitals.

Kong Vitals integrates with Prometheus using an intermediary data exporter, the Prometheus StatsD exporter. This integration allows Kong to efficiently ship Vitals metrics to an outside process where data points can be aggregated and made available for consumption by Prometheus, without impeding performance within the Kong proxy itself.

In this design, Kong writes Vitals metrics to the StatsD exporter as StatsD metrics. Prometheus scrapes this exporter as it would any other endpoint. Kong then queries Prometheus to retrieve and display Vitals data via the API and Kong Manager. Prometheus never scrapes the Kong nodes directly for time series data.
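To make the write path concrete, here is a minimal Go sketch of how a process could emit one StatsD metric to the exporter over UDP. It is not Kong's implementation. The metric name `kong.example.request.count`, the helper names `formatStatsD` and `sendStatsD`, and the address `127.0.0.1:9125` are assumptions chosen for illustration; only the plain `name:value|type` StatsD line format is standard.

```go
package main

import (
	"fmt"
	"net"
)

// formatStatsD renders one metric in the plain StatsD line format
// "<name>:<value>|<type>" (for example "c" for a counter, "g" for a gauge).
func formatStatsD(name string, value int64, metricType string) string {
	return fmt.Sprintf("%s:%d|%s", name, value, metricType)
}

// sendStatsD writes a single metric line to a StatsD listener over UDP.
// UDP is fire-and-forget: a nil error does not prove the line was received.
func sendStatsD(addr, line string) error {
	conn, err := net.Dial("udp", addr)
	if err != nil {
		return err
	}
	defer conn.Close()
	_, err = conn.Write([]byte(line))
	return err
}

func main() {
	// Hypothetical metric name, used only for this example.
	line := formatStatsD("kong.example.request.count", 1, "c")
	fmt.Println(line)

	// Assumed exporter address; adjust to wherever the StatsD exporter listens.
	if err := sendStatsD("127.0.0.1:9125", line); err != nil {
		fmt.Println("send failed:", err)
	}
}
```

Because the sender only fires UDP packets at the exporter, the proxy path does not wait on Prometheus. Prometheus picks up the aggregated values later when it scrapes the exporter.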

The exporter allows live-reloading of the configuration, so the label set or TTL of a given metric can change during the lifetime of the exporter. A modified ttl for a metric will not be in use until handleEvent is called with a new value for that metric. In theory one would want to expire a stale metric with ttl: 30m without reload by decreasing the ttl to 1s; this is not possible now. Should we handle this situation? I think it requires some kind of signalling about config reloads from main to the exporter.

Prometheus statsd exporter update

Implement label values storage in Exporter to detect metric staleness. So Elements in CounterContainer stores CounterVecs instead of Counters. Add the key ttl to the default section and to mapping sections. ttl is a timeout in seconds for stale metrics. The exporter saves each set of label values and updates the last time it received a metric with those label values. If no metric with the same label values is received for ttl seconds, statsd_exporter stops reporting the metric with that set of label values.

It would be great to hear your thoughts on this.

Could you please add tests for the added mapping fields, and tests that demonstrate/verify the expiry behaviour? Please update the documentation (README) as well.

Prometheus statsd exporter Patch

This patch implements clearing of metrics with variable labels, using a per-mapping configurable timeout (ttl). Our monitoring installation suffers from the behavior described in #129.

There are metrics from Kubernetes pods with constantly changing values for labels. For example, the metric ingress_nginx_upstream_retries_count has a label pod whose value is the changing pod name. There is no sense in storing metrics with old pod names, as they are never repeated. Removing metrics with these stale values will not cause any damage to subsequent aggregations or to multiple Prometheus servers.

The patch is divided into 3 parts to simplify review.
